Hello @23f3000345 ,

Let me try to explain everything in a more intuitive way

Simple Convolution

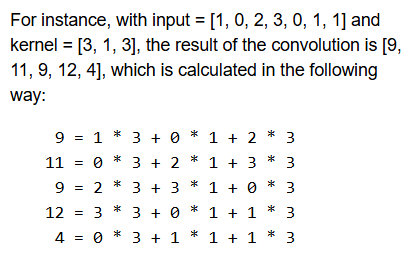

So from the image you shared, the basic convolution is done like this:

kernel = [3, 1, 3]

input = [1, 0, 2, 3, 0, 1, 1]

output = [9, 11, 9, 12, 4]

Which is:

output[0] = 1*3 + 0*1 + 2*3

output[1] = 0*3 + 2*1 + 3*3

...

That’s standard convolution, where:

- Kernel is applied left-to-right

- We slide it over input

- We use padding (with 0s) so that we can apply it even at the edges

Now, the *"start at kernel[0]input[10]" kind of behavior feels strange because it’s not this “standard” form. That’s circular convolution, with a reversed kernel.

Now Let’s Decode This Formula:

index = (i + (width - k) - offset) % N

Let’s understand each piece step by step.

i: output index

You’re computing output[i].

k: position in kernel (e.g., k=0 is the first kernel element)

width - k: This flips the kernel

Think of it like we want to align the end of the kernel with the current position i.

If kernel = [a, b, c], then flipped it becomes [c, b, a].

offset: Where to center the kernel

This helps you decide whether kernel[1] aligns with input[i], or maybe kernel[0] or kernel[2] does.

If offset = width // 2, the kernel is centered.

If offset = 0, it’s causal (kernel is fully behind the current index).

% N: Wraps the input (makes it circular)

So What Does It All Mean?

Think of it like this:

“To compute output[i], flip the kernel, shift it back by offset, and apply it to the input values wrapping around the array.”

So if:

width = 3 (kernel has 3 elements)offset = 1 → center the kernel at i- Then:

index = (i + (3 - k) - 1) % N

= (i + 2 - k) % N

So for each k = 0, 1, 2, you’re grabbing:

input[i + 2 - 0] → for k=0 → input ahead of iinput[i + 2 - 1] → for k=1 → input at i+1input[i + 2 - 2] → for k=2 → input at i

So this setup is applying the reversed kernel, centered at i.

Hope this helps clarify things a little bit!