Hi Community

I am currently doing the OpenCV Course and I am testing something with the HogDescriptor PeopleDetector().

I did the exercise 3.5 and with the example image it works fine.

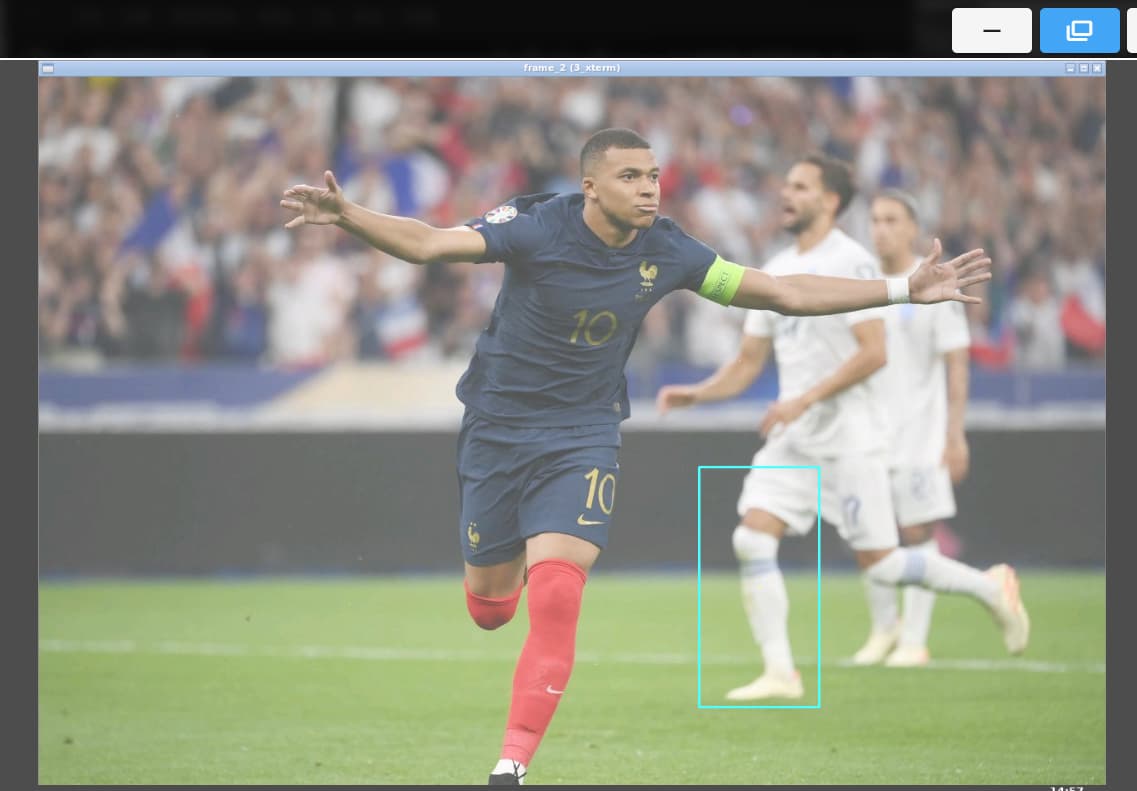

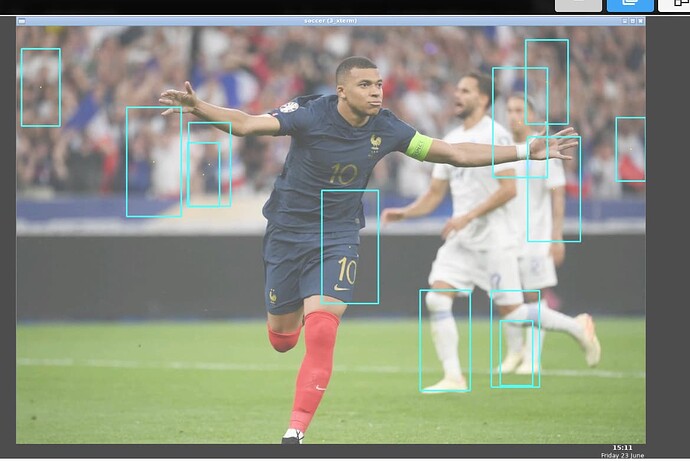

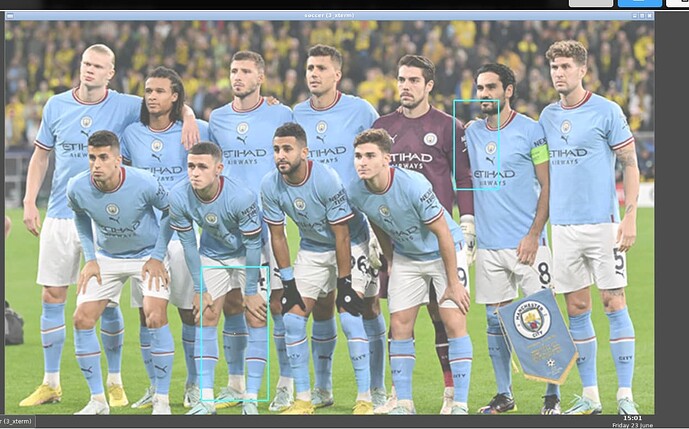

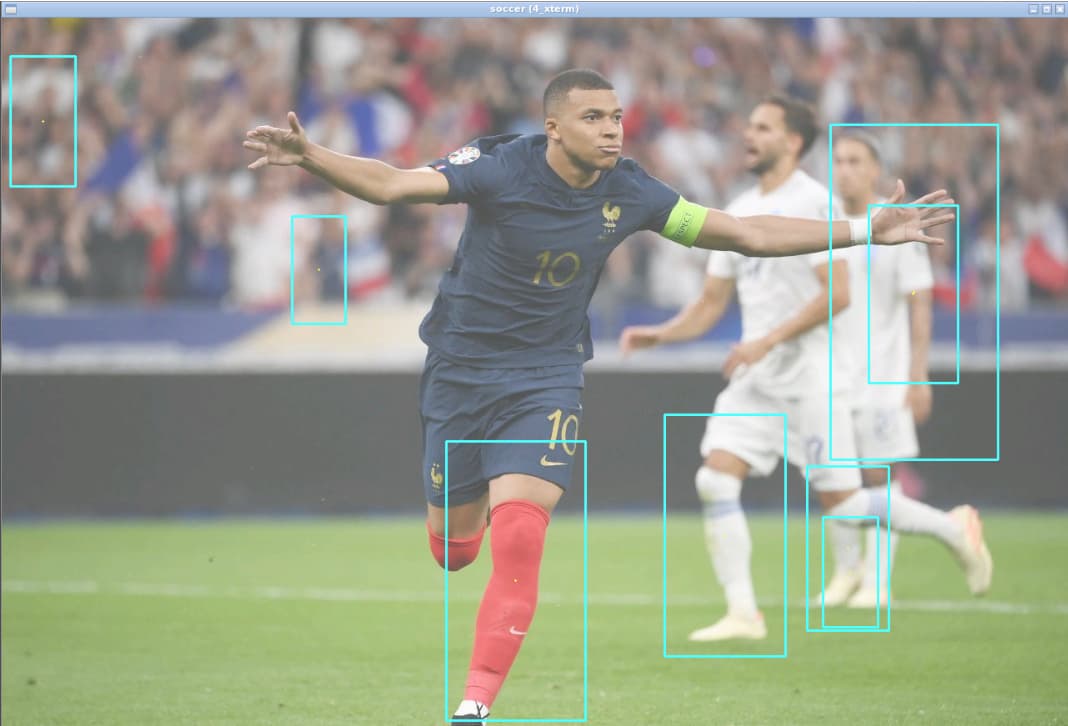

Now I am testing the same with some other images.

But with these images it does not detect correctly.

As far as I am concerned, the only parameters I can change are winStride, padding and scale, as well as the resize of the image.

I tried with different combinations of parameters, but I don’t get satisfying results. Does somebody know how to make the algorithm better, such that it detects the players correclty?

Here my code:

{

#!/usr/bin/env python

import rospy

from sensor_msgs.msg import Image

from cv_bridge import CvBridge, CvBridgeError

import cv2

import numpy as np

class LoadPeople(object):

def __init__(self):

self.image_sub = rospy.Subscriber("/camera/rgb/image_raw",Image,self.camera_callback)

self.bridge_object = CvBridge()

def camera_callback(self,data):

try:

# We select bgr8 because its the OpenCV encoding by default

cv_image = self.bridge_object.imgmsg_to_cv2(data, desired_encoding="bgr8")

except CvBridgeError as e:

print(e)

hog = cv2.HOGDescriptor()

#We set the hog descriptor as a People detector

hog.setSVMDetector(cv2.HOGDescriptor_getDefaultPeopleDetector())

img_nr = 1

if img_nr == 1:

img = cv2.imread('/home/user/catkin_ws/src/unit3_exercises/mbappe.jpg')

#resize

imX = 1280

imY = 921

img = cv2.resize(img,(imX,imY))

else :

img = cv2.imread('/home/user/catkin_ws/src/unit3_exercises/mancity.jpg')

#resize

imX = 1400

imY = 920

img = cv2.resize(img,(imX,imY))

#Hog

boxes, weights = hog.detectMultiScale(img, winStride=(2, 2),padding=(8, 8), scale=1.01)

boxes = np.array([[x, y, x + w, y + h] for (x, y, w, h) in boxes])

for (xA, yA, xB, yB) in boxes:

#Center in X

medX = xB - xA

xC = int(xA+(medX/2))

#Center in Y

medY = yB - yA

yC = int(yA+(medY/2))

#Draw a circle in the center of the box

cv2.circle(img,(xC,yC), 1, (0,255,255), -1)

# display the detected boxes in the original picture

cv2.rectangle(img, (xA, yA), (xB, yB),

(255, 255, 0), 2)

cv2.imshow('soccer',img)

cv2.waitKey(1)

def main():

load_people_object = LoadPeople()

rospy.init_node(‘load_people_node’, anonymous=True)

try:

rospy.spin()

except KeyboardInterrupt:

print(“Shutting down”)

cv2.destroyAllWindows()

if name == ‘main’:

main()

}

Thanks in advance for any help