Hello World,

I am facing an issue with the “ROS2 Basics in 5 Days (Python)” project, specifically in its second part, “Find Wall.” In this section, we are tasked with writing a service server. When the service is called, it should turn the robot so that its right side faces a wall, which is the starting position for the wall-following behavior. The code works perfectly in the simulation, but it runs into problems on the real robot in the lab.

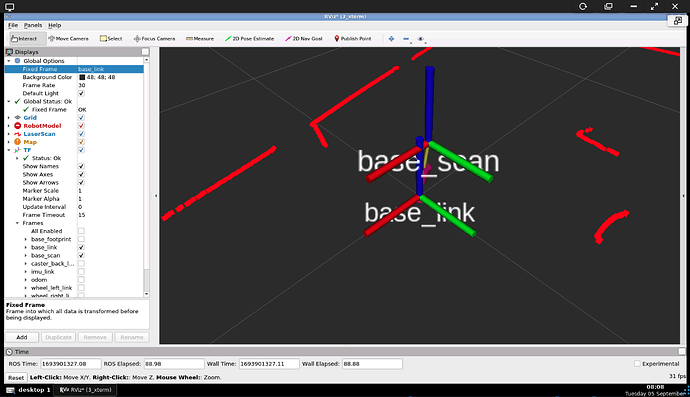

First, here is my logic for finding the wall. The robot rotates until it identifies the closest wall or until laser_min_index (the index of the smallest laser reading) reaches 360; in the simulation there is one reading per degree, so indices and degrees coincide. Then it moves forward until the distance to the wall is less than 0.3 meters. At that point, the robot stops and rotates until laser_min_index becomes 270, which means the wall is on the robot's right side. This logic works perfectly in the simulation.

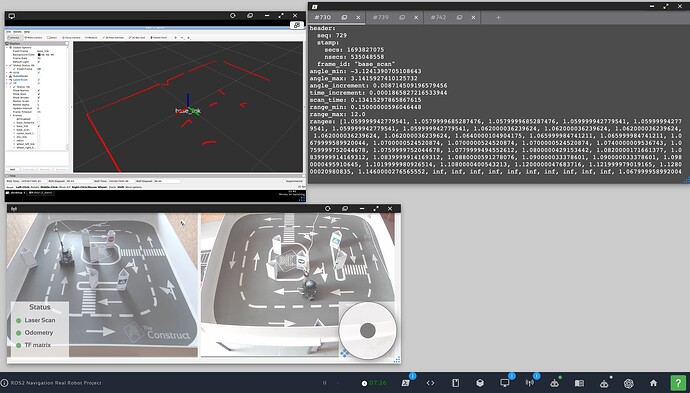
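The find-wall steps above can be sketched without ROS as a small state machine. This is an illustrative sketch, not the code from the project: instead of hard-coded indices it uses the bearing of the closest valid beam, computed from the scan's `angle_min` and `angle_increment`, so the same logic applies to a 360-reading or a 720-reading scan. It assumes the laser's forward axis matches the robot's front and that angles grow counter-clockwise (the `sensor_msgs/LaserScan` convention), so the right side is at -π/2. The state names, tolerance, and speeds are hypothetical.

```python
import math

# WALL_DISTANCE comes from the post; the other values are hypothetical.
WALL_DISTANCE = 0.3   # m: stop approaching below this distance
ANGLE_TOL = 0.05      # rad: accepted heading error
TURN_SPEED = 0.3      # rad/s
FORWARD_SPEED = 0.05  # m/s


def wrap(angle):
    """Wrap an angle to the interval [-pi, pi)."""
    return (angle + math.pi) % (2.0 * math.pi) - math.pi


def closest_reading(ranges, angle_min, angle_increment, range_min, range_max):
    """Return (distance, bearing) of the closest valid beam, or None.

    Bearing 0 is the laser's forward axis and angles grow counter-clockwise,
    so -pi/2 is the right side (assuming the laser is mounted facing forward).
    """
    best = None
    for i, r in enumerate(ranges):
        # Skip inf/NaN and readings outside the sensor's valid interval.
        if math.isfinite(r) and range_min <= r <= range_max:
            if best is None or r < best[0]:
                best = (r, wrap(angle_min + i * angle_increment))
    return best


def find_wall_step(state, ranges, angle_min, angle_increment, range_min, range_max):
    """Run one control step and return (next_state, linear_x, angular_z)."""
    reading = closest_reading(ranges, angle_min, angle_increment, range_min, range_max)
    if reading is None:  # no usable beam in this scan: stand still
        return state, 0.0, 0.0
    distance, bearing = reading

    # A positive (counter-clockwise) angular speed lowers the bearing of a
    # fixed obstacle, so we turn in the direction of the bearing error.
    if state == "FACE_WALL":  # rotate until the closest wall is straight ahead
        if abs(bearing) < ANGLE_TOL:
            return "APPROACH", 0.0, 0.0
        return state, 0.0, math.copysign(TURN_SPEED, bearing)

    if state == "APPROACH":  # drive forward until closer than WALL_DISTANCE
        if distance < WALL_DISTANCE:
            return "ALIGN", 0.0, 0.0
        return state, FORWARD_SPEED, 0.0

    if state == "ALIGN":  # rotate until the closest wall is on the right (-pi/2)
        error = wrap(bearing + math.pi / 2.0)
        if abs(error) < ANGLE_TOL:
            return "DONE", 0.0, 0.0
        return state, 0.0, math.copysign(TURN_SPEED, error)

    return "DONE", 0.0, 0.0
```

In an rclpy node, the scan callback could call `find_wall_step(self.state, msg.ranges, msg.angle_min, msg.angle_increment, msg.range_min, msg.range_max)`, store the returned state, and publish the two speeds as `linear.x` and `angular.z` of a `geometry_msgs/Twist`. Working with bearings instead of fixed indices is a design choice made for this sketch, not something the course prescribes.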

However, in the real robot lab, I’m facing several issues. First, the laser scan does not have 360 readings as in the simulation; the index goes up to 719, so there are roughly two readings per degree. Second, as the robot moves toward the wall, the laser distance reading increases instead of decreasing, so the robot gets too close to the wall and eventually crashes into it. Finally, I’m running into several other problems with the sensor readings.
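If the real scan has about 720 readings, indices hard-coded for the 360-reading simulation (such as 270 for the right side) point in different directions on the real robot. That might also explain a distance that grows while the robot approaches the wall, although this is only a guess and has not been checked on the lab robot. A more portable approach is to convert angles to indices using the `angle_min` and `angle_increment` fields of each `sensor_msgs/LaserScan` message, and to ignore invalid readings (inf, NaN, or values outside `range_min`/`range_max`). The helper names below are hypothetical, and the index wrap-around assumes a full 360-degree scan.

```python
import math


def index_for_angle(angle, angle_min, angle_increment, n):
    """Map a bearing (rad, counter-clockwise from the laser's forward axis) to a beam index."""
    i = round((angle - angle_min) / angle_increment)
    return i % n  # wrap around; only valid for a full 360-degree scan


def sector_min(ranges, angle, half_width, angle_min, angle_increment, range_min, range_max):
    """Smallest valid reading within +/- half_width (rad) of `angle`; inf if none is valid."""
    n = len(ranges)
    centre = index_for_angle(angle, angle_min, angle_increment, n)
    span = max(0, round(half_width / angle_increment))
    best = math.inf
    for k in range(centre - span, centre + span + 1):
        r = ranges[k % n]
        # Drop inf/NaN and readings the sensor itself reports as out of range.
        if math.isfinite(r) and range_min <= r <= range_max:
            best = min(best, r)
    return best
```

Assuming `angle_min = 0` in the simulation, `index_for_angle(-math.pi / 2, 0.0, 2 * math.pi / 360, 360)` gives 270, the index used above for the right side. With 720 readings the same angle maps to 540 when `angle_min = 0` or to 180 when `angle_min = -π`, which is why reading these fields from each message is safer than hard-coding numbers. For the forward distance, something like `sector_min(msg.ranges, 0.0, math.radians(10), msg.angle_min, msg.angle_increment, msg.range_min, msg.range_max)` averages out single bad beams better than one index.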

I hope someone can understand the issues I’m facing and offer some guidance. Feel free to ask for more information.

Thank you.